Several things haven’t changed in SharePoint 2013 search. Search still uses a componentized model based on a Shared Services architecture. Simply stated, you still provision a Search Service Application and a proxy. The Search administration UI is exposed by clicking the Search Service Application via Central Administration > Application Management > Manage Service Applications. As I stated in the introductory blog, the search engine still runs under mssearch.exe and invokes a daemon process during a crawl to retrieve content. Fetching content works very similarly to how it did in SharePoint 2010. Finally, the crawler still isn’t responsible for the index itself.

This blog series will go through what’s changed with crawl and feed and which components make up the crawl and feed portion of search. I’ll write about how these components work together, plus how to architect and scale them.

Crawl and Feed Components Basics

Listed below are the circumstances under which a SharePoint farm administrator should perform a full search crawl:

1. You just created a new Search service application, and the default content source (Local SharePoint sites) that is created along with it hasn’t been crawled yet.

2. An incremental crawl failed to crawl content because of errors. In that case, the incremental crawl removes the content from the index until the next full crawl.

3. A software update or service pack for SharePoint is installed on servers in the farm.

When you crawl the default zone of a SharePoint web application, the query processor automatically maps and returns search result URLs that correspond to the alternate access mapping (AAM) zone through which the query is executed. For more information, see Manage crawl rules in SharePoint Server 2013.

The most important thing I want you to take away from this blog is that we have now split crawl and feed into two components in SharePoint 2013. In SharePoint 2010, all of this was handled by the crawl component. That is, the crawl component was responsible not only for fetching content but also for extracting metadata, links, etc. during the crawl, using a variety of plug-ins all running in the context of MSSearch.exe. As data passed through the plug-ins, the index was built in memory on the crawler and then propagated to the Query Component to be indexed.

Now with SharePoint 2013, we use both the Crawl Component and the Content Processing Component for this purpose. The Crawl Component still fetches data, but instead of processing it and extracting metadata, links, and other rich information, it simply passes crawled items to the Content Processing Component, which runs under NodeRunner.exe, to do this work. One could say that mssearch.exe is half the process it used to be, because this additional processing has been stripped from it and given to another component and process. I don’t want to downplay its importance, though, because without crawl you have no index and no search. Plus, several improvements have been made to the crawler because we are offloading so much of this work, and it now takes more common-sense approaches to crawling, such as the two new crawl types (Continuous Crawl and Cleanup Crawl).

Crawl Component/Crawl Database and Distribution

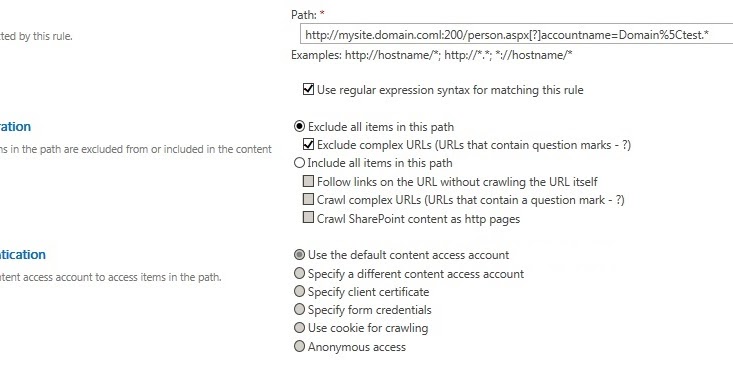

Crawl rules are evaluated in order, and only one crawl rule is ever applied to a given piece of content: the first rule in the list that matches it. For example, if a rule that includes everything in the 'forms' directory sits above a rule that excludes the rest of the site, all content inside the 'forms' directory hits the first rule and is crawled, while all other content is blocked. See also: Excluding URLs from SharePoint Foundation 2013 Search.
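To make the first-match behavior concrete, here is a minimal Python sketch. The rule list, the URLs, and the use of `fnmatch`-style `*` wildcards are assumptions for illustration only; SharePoint's own wildcard and case-matching options are configured per rule and are not modeled here.

```python
from fnmatch import fnmatchcase
from typing import List, Optional, Tuple

# A rule is (url_pattern, action), where action is "include" or "exclude".
Rule = Tuple[str, str]

def evaluate_crawl_rules(url: str, rules: List[Rule]) -> Optional[str]:
    """Return the action of the first rule whose pattern matches url."""
    for pattern, action in rules:
        # fnmatch '*' (which also matches '/') stands in for SharePoint wildcards
        if fnmatchcase(url, pattern):
            return action  # first match wins; later rules are never consulted
    return None  # no rule matched

# Hypothetical rules: the 'forms' include sits above a site-wide exclude.
rules: List[Rule] = [
    ("http://intranet/forms/*", "include"),
    ("http://intranet/*", "exclude"),
]

print(evaluate_crawl_rules("http://intranet/forms/leave.aspx", rules))  # include
print(evaluate_crawl_rules("http://intranet/news/today.aspx", rules))   # exclude
```

Swapping the two rules would block the 'forms' content as well, because the broader exclude rule would then be the first match.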

A crawler consists of a crawl component and a crawl database. Back in SharePoint 2010, there was a one-to-one relationship: a crawl component mapped to a unique crawl database. That relationship doesn’t exist in SharePoint 2013 Search, so a crawl component automatically communicates with all crawl databases if there is more than one. This is such a cool new concept to take in, because in SharePoint 2010 search, requiring each crawl component to map to a crawl database often resulted in lopsided database sizes.

For example, in SharePoint 2010:

Crawl Component 1 --> CrawlDB1

Crawl Component 2 --> CrawlDB2

Assuming crawl component 1 is responsible for a very large host defined as a content source, you could easily end up with lopsided database sizes, where CrawlDB1 is much larger than CrawlDB2. This required more administrative effort, because you would often implement host distribution rules to override this default behavior. This is also why it didn’t make sense to have a secondary crawl database when only one crawl component existed. If you would like to know more about this, see my original 2010 Search Crawl blog here.
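The lopsided outcome falls straight out of the fixed mapping. The following Python sketch is a deliberate simplification: the host names, item counts, and the host-to-component table are hypothetical, and the host distribution rules that 2010 administrators used to override the default are not modeled.

```python
from collections import Counter
from typing import Dict

# Hypothetical SharePoint 2010-style topology: each host is crawled by one
# crawl component, and each crawl component owns exactly one crawl database.
host_to_component: Dict[str, str] = {"bighost": "CC1", "smallhost": "CC2"}
component_to_crawldb: Dict[str, str] = {"CC1": "CrawlDB1", "CC2": "CrawlDB2"}
items_per_host: Dict[str, int] = {"bighost": 9_000_000, "smallhost": 500_000}  # made-up counts

def crawldb_sizes_2010(host_to_component: Dict[str, str],
                       component_to_crawldb: Dict[str, str],
                       items_per_host: Dict[str, int]) -> Dict[str, int]:
    sizes: Counter = Counter()
    for host, items in items_per_host.items():
        crawldb = component_to_crawldb[host_to_component[host]]
        sizes[crawldb] += items  # a whole host lands in a single crawl DB
    return dict(sizes)

print(crawldb_sizes_2010(host_to_component, component_to_crawldb, items_per_host))
# {'CrawlDB1': 9000000, 'CrawlDB2': 500000}
```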

In SharePoint 2013, all crawl components use all crawl databases so we no longer have a unique mapping between a crawl component and crawl database. A single large host is pushed out across multiple crawl databases. Yes, one crawl component can utilize multiple crawl databases for a single host.

Important Note: This is only true if that large single host (web application) is utilizing more than one content database and/or the SharePoint administrator manually invokes a rebalance.

This is an important note because in SharePoint 2013, we no longer distribute crawl items to crawl DBs based on URL. We now automatically distribute crawl items based on content database ID. So if I have a single large host (web application) that uses two content databases, the crawl items from each content database will be distributed to a different crawl database, assuming you have at least two crawl DBs. Crawl distribution is an interesting topic that raises many questions, so I added some sample questions and answers below to fill any technical gaps.

Question and Answer Time

Question: What do you mean we assign crawl items to crawl databases based on database id?

Answer: Each web app is associated with at least one content database. So if I have two web apps, I have at least two content databases. During a crawl with two crawl databases, all hosts associated with content database 1 will be assigned to crawl database 1, and all hosts associated with content database 2 will be assigned to crawl database 2.
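Here is a small Python sketch of distribution keyed on content database ID, including the single-large-host case where one web application spans two content databases. It rests on assumptions: the round-robin assignment policy, the readable names standing in for real content database GUIDs, and the helper names are all hypothetical; the article only says distribution is keyed on content database ID.

```python
from collections import Counter
from typing import Dict, List, Tuple

def assign_crawl_dbs(content_db_ids: List[str], crawl_dbs: List[str]) -> Dict[str, str]:
    # Assumption: content databases are spread over crawl DBs in order
    # (round-robin). Any policy that spreads content DBs would illustrate the idea.
    return {cid: crawl_dbs[i % len(crawl_dbs)] for i, cid in enumerate(content_db_ids)}

def crawl_db_counts(items: List[Tuple[str, str]], mapping: Dict[str, str]) -> Dict[str, int]:
    """items are (url, content_db_id) pairs; count how many land in each crawl DB."""
    return dict(Counter(mapping[cid] for _url, cid in items))

# One large host (web application) backed by two content databases.
items = [
    ("http://bighost/sites/a/doc1.docx", "ContentDB1"),
    ("http://bighost/sites/a/doc2.docx", "ContentDB1"),
    ("http://bighost/sites/b/doc3.docx", "ContentDB2"),
]
mapping = assign_crawl_dbs(["ContentDB1", "ContentDB2"], ["CrawlDB1", "CrawlDB2"])
print(crawl_db_counts(items, mapping))  # {'CrawlDB1': 2, 'CrawlDB2': 1}
```

Note that the host name never enters the routing decision; only the content database ID does.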

Question: Can I provision one crawl component and multiple crawl databases and all crawl databases will be used?

Answer: Yes, in the following scenarios:

Scenario 1: You have more than one content database. The crawler will automatically distribute crawl items across all crawl databases based on content database ID, although it’s still a good idea to provision a second crawl component for redundancy.

Scenario 2: Let’s assume I have one web application/single content database that is considered an extremely large host. Let’s say 11,000,000 links from this single host are stored in a single crawl store database. I can create a secondary crawl database and move a percentage of the crawled links over to the new crawl store database. This is a manual process performed by the SharePoint Search administrator, using two new properties of the Search Service Application: CrawlStoreImbalanceThreshold and CrawlPartitionSplitThreshold. By default, both properties are set to 10,000,000, which means a rebalance isn’t available for an administrator to execute until a crawl store database contains more than 10,000,000 links. These properties are configurable, and you can lower the thresholds through PowerShell. Once an administrator kicks off a rebalance in this scenario, items from the single host are distributed across both crawl store databases. For more information, please see the following blog.
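The threshold check described in Scenario 2 can be sketched in Python as follows. This is only a model under stated assumptions: it collapses CrawlStoreImbalanceThreshold and CrawlPartitionSplitThreshold into a single number (the 10,000,000 default quoted above), and the even split used as a rebalance target is hypothetical, not SharePoint's actual rebalancing algorithm. The function names are illustrative.

```python
from typing import Dict, List

# Default quoted in the article for both threshold properties.
# Assumption: this sketch treats them as one number.
DEFAULT_THRESHOLD = 10_000_000

def stores_eligible_for_rebalance(links_per_store: Dict[str, int],
                                  threshold: int = DEFAULT_THRESHOLD) -> List[str]:
    """Crawl stores holding strictly more than `threshold` links."""
    return [store for store, links in links_per_store.items() if links > threshold]

def even_split(links_per_store: Dict[str, int]) -> Dict[str, int]:
    """Hypothetical rebalance target: spread the total link count evenly."""
    total = sum(links_per_store.values())
    base, extra = divmod(total, len(links_per_store))
    return {store: base + (1 if i < extra else 0)
            for i, store in enumerate(links_per_store)}

stores = {"CrawlStoreDB1": 11_000_000, "CrawlStoreDB2": 0}
print(stores_eligible_for_rebalance(stores))  # ['CrawlStoreDB1']
print(even_split(stores))  # {'CrawlStoreDB1': 5500000, 'CrawlStoreDB2': 5500000}
```

Passing a lower `threshold` mirrors lowering the property values through PowerShell, which makes smaller crawl stores eligible.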

Question: I provisioned a new secondary crawl database and new items being crawled are still using the original crawl database instead of the new one. Why is this happening?

Answer: After you provision a new crawl database, all previously crawled content is still mapped to the original crawl database. This is a fancy way of saying the content database IDs were already mapped to the original crawl database, so any new items picked up from those content databases will still use the original crawl database. To get some content databases reassigned to the new crawl database, perform one of the following options:

Option 1: Perform a full crawl.

Option 2: Perform a rebalance by following Scenario 2 from the previous question.
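The "sticky" mapping behind this answer can be modeled with a small Python class. Everything here is hypothetical: the class and method names, and the least-loaded rule for placing content databases that have never been mapped. The one behavior taken from the article is that existing content database assignments survive the addition of a crawl database until a full crawl (or rebalance) lets them be reassigned.

```python
from typing import Dict, List

class CrawlStoreMap:
    """Hypothetical model of sticky content-DB-to-crawl-DB assignments."""

    def __init__(self, crawl_dbs: List[str]) -> None:
        self.crawl_dbs: List[str] = list(crawl_dbs)
        self.mapping: Dict[str, str] = {}

    def add_crawl_db(self, name: str) -> None:
        # Existing assignments are untouched; the new crawl DB is only a
        # candidate for content databases that have not been mapped yet.
        self.crawl_dbs.append(name)

    def crawl_db_for(self, content_db_id: str) -> str:
        if content_db_id not in self.mapping:
            # Assumption: a new content DB goes to the crawl DB with the fewest
            # content DBs mapped so far (ties go to the earliest crawl DB).
            load = {db: 0 for db in self.crawl_dbs}
            for db in self.mapping.values():
                load[db] += 1
            self.mapping[content_db_id] = min(self.crawl_dbs, key=lambda db: load[db])
        return self.mapping[content_db_id]

    def reset_for_full_crawl(self) -> None:
        # Per the article, a full crawl lets content DBs be reassigned.
        self.mapping.clear()

store = CrawlStoreMap(["CrawlDB1"])
store.crawl_db_for("ContentDB1")
store.crawl_db_for("ContentDB2")
store.add_crawl_db("CrawlDB2")
print(store.crawl_db_for("ContentDB2"))  # CrawlDB1: the old mapping sticks
store.reset_for_full_crawl()
print(store.crawl_db_for("ContentDB1"), store.crawl_db_for("ContentDB2"))  # CrawlDB1 CrawlDB2
```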

Question: Will the second crawl database get utilized if I create a new web application/site collection/site?

Answer: Not at first, because continuous crawl will not pick up new items from newly created web applications until the cleanup crawl runs. The cleanup crawl is basically an incremental crawl and runs every 4 hours by default. In this case, the second crawl database starts populating after that incremental crawl, and from then on, continuous crawls pick up new items from the new web app and add them to crawl database 2.

Question: Can I provision multiple crawl components with a single crawl database?

Answer: Yes, you can provision the crawl topology this way as well, assuming you’re crawling fewer than 20 million items, which is the recommended limit per crawl database.

Scaling Crawl for Fault Tolerance and Performance

In SharePoint 2013, both fault tolerance and performance are gained by adding crawl components. To gain fault tolerance in SharePoint 2010, two crawl components (one mirrored) had to be provisioned per crawl database. Since there is no longer a one-to-one relationship between crawl components and crawl databases, you gain fault tolerance simply by provisioning another crawl component. In a scenario with 3 crawl components, if any one of them goes down, the remaining crawl components pick up the slack.
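A toy Python model of "the remaining crawl components pick up the slack" follows. The round-robin split of a batch across healthy components, the component names, and the `distribute` helper are assumptions made for illustration; this is not how SharePoint internally schedules work among crawl components.

```python
from typing import Dict, List

def distribute(batch: List[str], components: Dict[str, bool]) -> Dict[str, List[str]]:
    """Spread a batch of URLs round-robin over the healthy crawl components."""
    healthy = [name for name, up in components.items() if up]
    if not healthy:
        raise RuntimeError("no crawl component available")
    plan: Dict[str, List[str]] = {name: [] for name in healthy}
    for i, url in enumerate(batch):
        plan[healthy[i % len(healthy)]].append(url)
    return plan

batch = [f"http://intranet/doc{i}" for i in range(6)]
print(distribute(batch, {"CC1": True, "CC2": True, "CC3": True}))   # 2 URLs each
print(distribute(batch, {"CC1": True, "CC2": False, "CC3": True}))  # CC1 and CC3 take 3 each
```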

As for performance, adding crawl components means more documents per second (DPS) can be processed. Yes, you can crawl more aggressively with two crawl components than with one. Whether you need one crawl component or several depends on a variety of factors, such as the following:

1. How often are items changed or added to sites?

2. How aggressively are you crawling?

3. Are you satisfied with crawl freshness times?

4. How powerful is the hardware hosting your crawl component?

5. Do you require redundancy?

The usual sign that you need additional crawl components for performance reasons is high CPU. During a crawl, CPU load rises along with high DPS (documents per second). Sometimes this is acceptable, but if the crawl is consistently pegging the CPU, it might be time to offload some of this processing by provisioning a secondary crawl component on a different server. It’s also recommended to monitor network and disk load to ensure they aren’t bottlenecks during a crawl. Network load is generated when the crawler downloads content from hosts. Disk load is generated when crawled items are temporarily stored until the Content Processing Component picks them up. Again, I suspect the most likely reason to provision additional crawl components is high CPU combined with high DPS during crawls.
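One way to turn "consistently pegging CPU while DPS is high" into a repeatable check is sketched below in Python. The sample format and every threshold (`cpu_limit`, `min_fraction`, `dps_floor`) are hypothetical placeholders; derive real values from your own crawl baselines, for example from performance counters you already collect.

```python
from typing import List, Tuple

def crawl_cpu_bound(samples: List[Tuple[float, float]], dps_floor: float,
                    cpu_limit: float = 90.0, min_fraction: float = 0.8) -> bool:
    """samples are (cpu_percent, documents_per_second) readings taken during a crawl.

    Returns True when at least `min_fraction` of the readings show CPU above
    `cpu_limit` while DPS is at or above `dps_floor`. All thresholds are
    hypothetical and should come from your own baseline.
    """
    if not samples:
        return False
    hot = sum(1 for cpu, dps in samples if cpu > cpu_limit and dps >= dps_floor)
    return hot / len(samples) >= min_fraction

busy_crawl = [(95.0, 40.0)] * 9 + [(50.0, 10.0)]
print(crawl_cpu_bound(busy_crawl, dps_floor=20.0))  # True
```

Requiring both conditions together keeps a CPU spike from some unrelated process from being read as crawl pressure.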

Question: What about scaling out the associated Crawl Database?

Answer: In terms of performance, Microsoft recommends one crawl database per 20 million items. So if I have a 100-million-item index, I need 5 crawl databases. For crawl database redundancy, you can use either SQL mirroring or SQL AlwaysOn.
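The sizing arithmetic is a ceiling division. Here is a tiny Python sketch using the 20-million-items-per-crawl-database recommendation quoted above; the function name is illustrative.

```python
ITEMS_PER_CRAWL_DB = 20_000_000  # recommendation quoted in the article

def crawl_dbs_needed(total_items: int, per_db: int = ITEMS_PER_CRAWL_DB) -> int:
    """Smallest number of crawl databases so that none exceeds `per_db` items."""
    # Integer ceiling division; a farm always has at least one crawl database.
    return max(1, (total_items + per_db - 1) // per_db)

print(crawl_dbs_needed(100_000_000))  # 5
print(crawl_dbs_needed(100_000_001))  # 6
```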

Question: Where do I provision additional crawl components and additional crawl databases?

Answer: This is now all done through PowerShell. To update the current search topology by adding a crawl component, you clone it into a new topology, add your desired components to the clone, and then activate it, all within PowerShell. Please see the resources section for more information.

Crawl Behind the Scenes (Advanced)

When a crawl starts, MSSearch.exe invokes a daemon process called MSSDmn.exe. This process loads the protocol handlers needed to connect to and fetch the content, then hands the content off to MSSearch.exe for further processing. Initially, mssdmn.exe calls the site’s SiteData web service using the “GetChanges” SOAP action to request and receive the latest changes. The SiteData web service resides within IIS under the site’s _vti_bin directory.

Fetching the latest changes works by comparing the last change the crawler processed, which is recorded in the crawl database’s MSSChangeLogCookies table, with the event cache table of the associated content database. Once the changes are returned from IIS to mssdmn.exe, it fetches the changed items and, after retrieving them successfully, passes them to mssearch.exe for further processing. This process repeats until all of the changes are processed. Great tools for watching these transactions behind the scenes are Fiddler, ULS logs, and Network Monitor.
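The change-cookie loop can be sketched in Python as follows. This is a simulation, not the SiteData protocol: the real change log cookie is an opaque value stored in MSSChangeLogCookies, whereas here it is a plain integer change ID, and `Change`, `get_changes`, and `incremental_pass` are hypothetical names standing in for the GetChanges round trips.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class Change:
    change_id: int  # stands in for an entry's position in the content DB's event cache
    url: str

def get_changes(event_cache: List[Change], cookie: int, batch_size: int) -> List[Change]:
    """Hypothetical stand-in for a GetChanges call: the next batch of changes
    newer than the crawler's stored change log cookie, oldest first."""
    newer = sorted((c for c in event_cache if c.change_id > cookie),
                   key=lambda c: c.change_id)
    return newer[:batch_size]

def incremental_pass(event_cache: List[Change], cookie: int,
                     batch_size: int = 2) -> Tuple[List[str], int]:
    fetched: List[str] = []
    while True:
        batch = get_changes(event_cache, cookie, batch_size)
        if not batch:
            return fetched, cookie  # caught up; this cookie is kept for next time
        for change in batch:
            fetched.append(change.url)  # real crawler: fetch the item, hand it to mssearch.exe
        cookie = batch[-1].change_id  # advance the cookie past this batch

cache = [Change(i, f"http://intranet/doc{i}.docx") for i in range(1, 6)]
print(incremental_pass(cache, cookie=2))
```

Because the cookie only advances after a batch is handled, the next pass starts exactly where this one left off.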

Feeding Behind the Scenes (Advanced)

Feeding crawled items from the crawl component to the content processing component is an interesting process. First, remember that I mentioned search in 2010 consisted of multiple plug-ins to extract and index data. In SharePoint 2013, only one plug-in exists, called the Content Plugin, and it’s responsible for routing crawled items to the content processing component. To feed the content processing component, the crawl component establishes a connection with it, and a session is created. One way to look behind the scenes at crawled items being fed from the crawl component to the content processing component is to set SharePoint Server Search > Crawler logging to Verbose in Central Administration. The following is an example of what this looks like in the ULS logs:

12/02/2012 16:56:57.14 mssearch.exe (0x0340) 0x0CB8 SharePoint Server Search Crawler:Content Plugin ai6x1 Verbose CSSFeeder::SubmitCurrentBatch: submitting document doc id ssic://11; doc size 9909;

12/02/2012 16:56:57.14 mssearch.exe (0x0340) 0x0CB8 SharePoint Server Search Crawler:Content Plugin ai6x1 Verbose CSSFeeder::SubmitCurrentBatch: submitting document doc id ssic://12; doc size 9281;

12/02/2012 16:57:00.14 mssearch.exe (0x0340) 0x0CB8 SharePoint Server Search Crawler:Content Plugin af7y6 Verbose CSSFeedersManager::CallbackReceived: Suceess = True strDocID = ssic://11

12/02/2012 16:57:00.14 mssearch.exe (0x0340) 0x0CB8 SharePoint Server Search Crawler:Content Plugin af7y6 Verbose CSSFeedersManager::CallbackReceived: Suceess = True strDocID = ssic://12
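To pair each submitted document with its callback in a longer log, a small Python parser over lines like the ones above is enough. The regular expressions are written against these sample lines only (including the "Suceess" spelling that appears in the log) and are an assumption about the message format, not a documented schema; real ULS files also carry more tab-separated columns, which `re.search` simply skips over.

```python
import re
from typing import Dict, List

# Patterns for the two verbose Content Plugin messages in the sample lines.
SUBMIT_RE = re.compile(r"SubmitCurrentBatch: submitting document doc id ([^;\s]+); doc size (\d+);")
CALLBACK_RE = re.compile(r"CallbackReceived: Suc\w+ = (\w+) strDocID = ([^;\s]+)")

def correlate(lines: List[str]) -> Dict[str, dict]:
    """Pair each submitted doc id with its size and callback result (None = no callback yet)."""
    sizes: Dict[str, int] = {}
    results: Dict[str, bool] = {}
    for line in lines:
        m = SUBMIT_RE.search(line)
        if m:
            sizes[m.group(1)] = int(m.group(2))
            continue
        m = CALLBACK_RE.search(line)
        if m:
            results[m.group(2)] = m.group(1) == "True"
    return {doc: {"size": size, "success": results.get(doc)} for doc, size in sizes.items()}

sample = [
    "12/02/2012 16:56:57.14 mssearch.exe (0x0340) 0x0CB8 SharePoint Server Search Crawler:Content Plugin ai6x1 Verbose CSSFeeder::SubmitCurrentBatch: submitting document doc id ssic://11; doc size 9909;",
    "12/02/2012 16:56:57.14 mssearch.exe (0x0340) 0x0CB8 SharePoint Server Search Crawler:Content Plugin ai6x1 Verbose CSSFeeder::SubmitCurrentBatch: submitting document doc id ssic://12; doc size 9281;",
    "12/02/2012 16:57:00.14 mssearch.exe (0x0340) 0x0CB8 SharePoint Server Search Crawler:Content Plugin af7y6 Verbose CSSFeedersManager::CallbackReceived: Suceess = True strDocID = ssic://11",
    "12/02/2012 16:57:00.14 mssearch.exe (0x0340) 0x0CB8 SharePoint Server Search Crawler:Content Plugin af7y6 Verbose CSSFeedersManager::CallbackReceived: Suceess = True strDocID = ssic://12",
]
print(correlate(sample))
# {'ssic://11': {'size': 9909, 'success': True}, 'ssic://12': {'size': 9281, 'success': True}}
```

A document whose `success` stays `None` was submitted but never acknowledged in the lines scanned.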

I picked up the following information from my homey Brian Pendergrass. Thanks, Brian!

It’s important to understand that the entire crawled item isn’t sent during feeding. During a crawl, the crawler temporarily stores crawled items on local disk as blobs. What the crawl component feeds to the content processing component is the crawled item’s metadata and a pointer to the blob sitting in the network share. Later, the content processing component retrieves the associated blob and starts processing it.
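The "metadata plus pointer" handoff can be illustrated with a short Python sketch. It is an analogy under stated assumptions: a local temporary directory stands in for the crawler's staging location and network share, and `FeedItem`, `stage_crawled_item`, and `process_item` are hypothetical names. The point it shows is that the fed message carries a path, not the content, and the consumer reads the blob on its own schedule.

```python
import os
import tempfile
from dataclasses import dataclass

@dataclass
class FeedItem:
    doc_id: str
    url: str
    blob_path: str  # a pointer to the staged blob, not the content itself

def stage_crawled_item(doc_id: str, url: str, content: bytes, staging_dir: str) -> FeedItem:
    """Crawler side: write the fetched bytes to disk, feed only metadata + pointer."""
    path = os.path.join(staging_dir, doc_id.replace("://", "_") + ".blob")
    with open(path, "wb") as f:
        f.write(content)
    return FeedItem(doc_id=doc_id, url=url, blob_path=path)

def process_item(item: FeedItem) -> int:
    """Content processing side: fetch the blob later via the pointer."""
    with open(item.blob_path, "rb") as f:
        data = f.read()
    return len(data)  # stand-in for parsing and metadata extraction

with tempfile.TemporaryDirectory() as staging:
    fed = stage_crawled_item("ssic://11", "http://intranet/doc.docx", b"x" * 9909, staging)
    print(os.path.basename(fed.blob_path), process_item(fed))  # ssic_11.blob 9909
```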

Thanks,

Russ Maxwell, MSFT

As I learn all about SharePoint 2013 administration this week at SharePoint911 – SharePoint 2013 Administration Training, one thing stands out above the rest: search. Search could really be a week-long class in itself, but we did dedicate 4 hours to it today. In 2013, search has undergone an extreme makeover: SharePoint Edition. One of the new features that I had heard about but didn’t really understand until today is the new concept of a continuous crawl. Is it really continuous? In SharePoint 2010 we had two options: incremental or full. You could schedule an incremental crawl to run every 15 minutes, but if a crawl ran past the 15-minute mark, the next one would wait until the next 15-minute block before starting. For example, let’s say we schedule 15-minute incremental crawls on a 2010 farm. The first crawl completes in 15 minutes, but the second takes 20 minutes. SharePoint 2010 would then wait 10 minutes before kicking off another incremental crawl.

The OLD Way:

| 15 minutes | 20 minutes | 10 minutes (no crawl) | 15 minutes |

The NEW Way:

| 15 minutes | 20 minutes | 15 minutes | 15 minutes |
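The 2010 timeline can be reproduced with a short Python sketch: each crawl may only start on a 15-minute boundary, so a crawl that overruns pushes the next start to the following boundary. The durations come from the example above. The function names are hypothetical, and the sketch makes no attempt to model how SharePoint 2013 schedules continuous crawls internally.

```python
from typing import List, Tuple

def schedule_2010(durations: List[int], interval: int = 15) -> List[Tuple[int, int]]:
    """(start, end) minute marks for incremental crawls on a fixed 2010-style schedule.

    A crawl can only start on an `interval` boundary; a boundary that arrives
    while the previous crawl is still running is skipped.
    """
    runs: List[Tuple[int, int]] = []
    next_start = 0
    for d in durations:
        end = next_start + d
        runs.append((next_start, end))
        # round `end` up to the next boundary (integer ceiling division)
        next_start = (end + interval - 1) // interval * interval
    return runs

def idle_gaps(runs: List[Tuple[int, int]]) -> List[int]:
    """Minutes with no crawl running between consecutive runs."""
    return [nxt[0] - prev[1] for prev, nxt in zip(runs, runs[1:])]

runs = schedule_2010([15, 20, 15])
print(runs)             # [(0, 15), (15, 35), (45, 60)]
print(idle_gaps(runs))  # [0, 10]
```

The 10-minute gap after the 20-minute crawl is exactly the "No Crawl" window in the old timeline.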

This is really just a continuous incremental crawl, but names don’t always match up in 2013 (SkyDrive vs. SkyDrive Pro; Apps, as in lists and document libraries, vs. Cloud Apps, as in development). Some other fun search facts for 2013: Search Best Bets and Enterprise Keywords are dead (replaced by query rules), instant search (pre-query suggestions) is awesome, search scopes are gone (replaced by result sources), and most admin-level search work is now done in PowerShell, while pretty much everything else is done at the site collection level. SharePoint 2013 is the real deal and definitely is one search to rule them all in the Enterprise…long live Google Search appliances.